In this ECPS interview, Professor Daniel Treisman examines how Trump’s political style intersects with the logic of informational autocracy and democratic backsliding. Drawing on “Informational Autocracy,” he argues that contemporary authoritarianism often relies less on mass repression than on “controlling narratives, selective coercion, and performance legitimacy.” Trump’s pressure on comedians, broadcasters, universities, and law firms, Professor Treisman suggests, reflects a familiar “inclination” toward intimidation—yet “the outcome was different,” because democratic institutions can still generate pushback. The core issue, he stresses, is whether US checks and civil society can withstand “executive aggrandizement”—the drive to “go beyond the formal or traditional powers of the office and consolidate control.”

Interview by Selcuk Gultasli

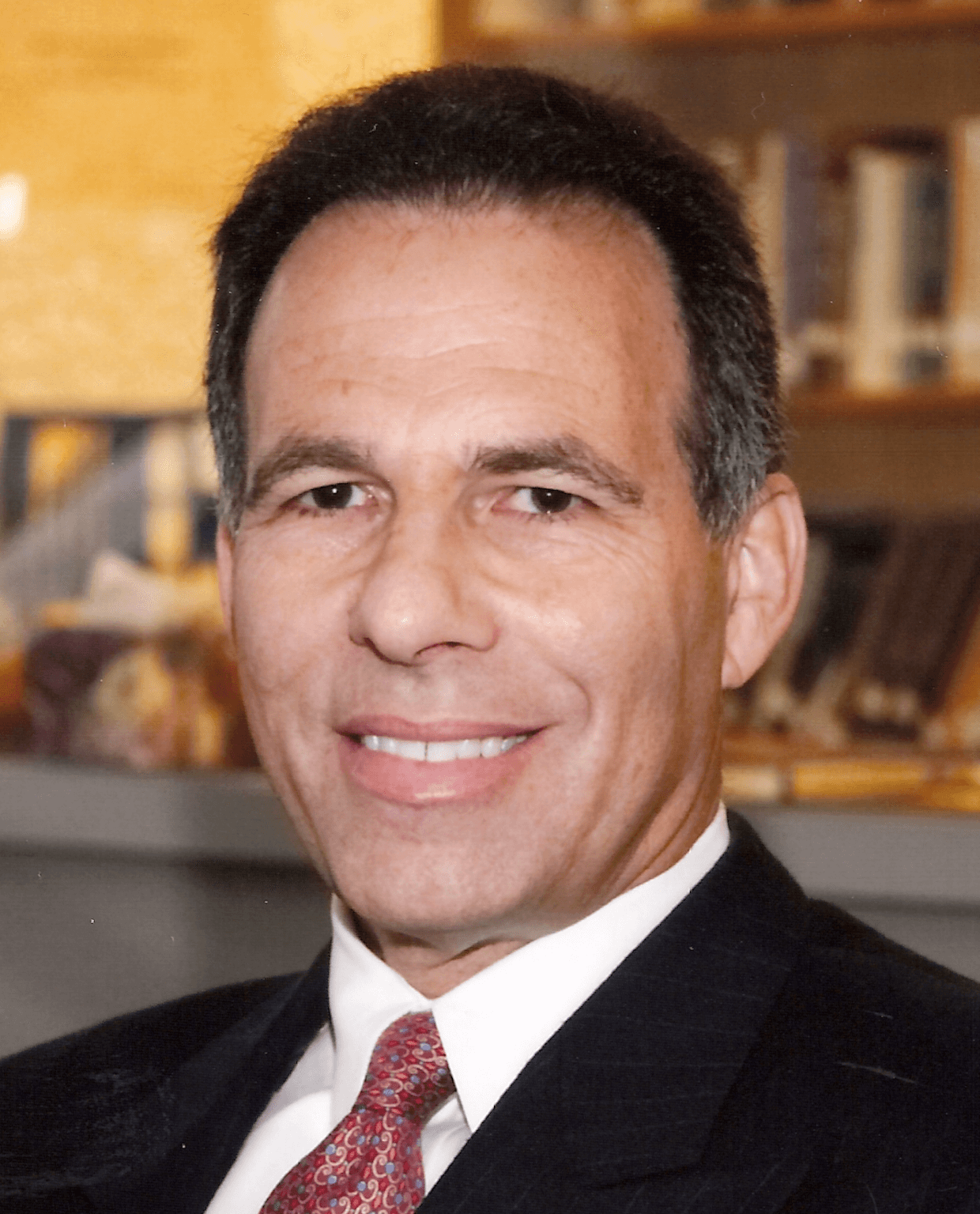

In an era marked by democratic backsliding, populist leadership, and the reconfiguration of informational power, the resilience of liberal democracy has become a central concern for scholars and policymakers alike. In this wide-ranging interview with the European Center for Populism Studies (ECPS), Professor Daniel Treisman—Professor of Political Science at the University of California, Los Angeles, and Research Associate at the National Bureau of Economic Research—offers a nuanced and empirically grounded assessment of how Donald Trump’s political strategy intersects with the logic of informational autocracy, executive aggrandizement, and democratic fragility.

Drawing on his influential work Informational Autocracy (co-authored with Sergei Guriev), Professor Treisman situates Trump’s threats against comedians, journalists, universities, and other institutional actors within a broader global pattern in which contemporary autocrats rely less on mass repression than on “controlling narratives, selective coercion, and performance legitimacy.” While Trump’s behavior often resembles that of informational autocrats, Professor Treisman emphasizes a crucial distinction: “So, while the inclination is similar, the outcome was different.” Episodes such as the pressure placed on late-night comedian Jimmy Kimmel reveal Trump’s “tendency to expand his power and to overstep traditional limits,” but also the continued—if uneven—capacity of democratic institutions and civil society to push back.

At the core of the interview lies a central analytical question: whether Trump’s conduct represents a failed or incomplete attempt to translate informational autocracy into a still-competitive democratic system. As Professor Treisman puts it, “The real question… is how resilient democratic societies and civil societies in democratic settings can prove to be in response to a leader who seeks what is often called executive aggrandizement.” This concern animates Professor Treisman’s discussion of selective intimidation, signaling repression, and the targeting of elite institutions—strategies designed to “score some visible victories” and deter broader resistance without resorting to outright censorship.

The interview also explores how new media ecosystems and the rise of a tech “broligarchy” complicate classical models of informational control. Professor Treisman highlights the hybrid arrangements created by platform ownership, algorithmic amplification, and strategic alignment between populist leaders and tech elites, noting that these dynamics allow political actors to undermine epistemic authority “without overt censorship.” While Trump has aggressively pressured legacy media through litigation and regulatory threats, his relationship with major technology firms remains more transactional and indirect—distinct from the tightly coordinated media control characteristic of full informational autocracies.

Beyond the US case, Professor Treisman offers comparative insights into charismatic populism in Latin America, bureaucratized authoritarianism in Russia and Hungary, and the structural uncertainties surrounding democratic decline. Reflecting on Democracy by Mistake, he cautions against deterministic readings of democratic erosion, stressing that “mistakes can be forces for good” as well as for authoritarian empowerment. In closing, Professor Treisman urges analytical humility: distinguishing between cyclical stress and durable authoritarian transformation, he argues, remains inherently uncertain, as history “does not come with labels that are easy to read.”

Taken together, this interview provides a sober, theoretically informed reflection on Trumpism, informational power, and the fragile boundaries between democratic contestation and authoritarian drift.

Here is the edited transcript of our interview with Professor Daniel Treisman, slightly revised for clarity and flow.

Trump Has Shown Every Inclination of Informational Autocrats

US President Donald Trump held a campaign rally at PPG Paints Arena in Pittsburgh, Pennsylvania, on November 4, 2024. Photo: Chip Somodevilla.

Professor Daniel Treisman, thank you so much for joining our interview series. Let me start right away with the first question: In “Informational Autocracy,” you argue that contemporary autocrats rely less on overt repression and more on controlling narratives, selective coercion, and performance legitimacy. How should we analytically situate Trump’s recent threats against broadcasters and comedians within this framework—are we observing an attempted translation of informational autocracy into a still-competitive democratic setting?

Professor Daniel Treisman: It’s very interesting to think about the various tactics and approaches that Trump has used and to compare them with the kinds of practices we see in informational autocracies. Clearly, there are many parallels, and a great deal looks very familiar.

For instance, in the early 2000s in Russia, President Putin was offended by a comedy show that portrayed him in an unflattering light. It was a satirical program called Kukly. He made it apparent to the authorities at that station that the show had to be canceled, and it was indeed canceled.

You mentioned Trump and comedy in the US, and we know about the recent Jimmy Kimmel case. What is interesting is that, on the surface, the situation looks very similar. Trump was offended by jokes Kimmel had been telling on his show, and he made it clear to the owners of the station that he thought Kimmel should be canceled. The head of the FCC (Federal Communications Commission) then put pressure on the channel.

The outcome, however, was different. Kimmel was taken off the air for a few days—about a week—and then reinstated. He returned very forcefully, speaking about freedom and the need for separation between government and television.

So, while the inclination is similar, the outcome was different. We often see in Trump a tendency to expand his power and to overstep traditional limits. The real question, for me, is how resilient democratic societies and civil societies in democratic settings can prove to be in response to a leader who seeks what is often called executive aggrandizement—going beyond the formal or traditional powers of the office and consolidating control in his own hands. This is precisely the process that characterizes democratic backsliding toward informational autocracy.

In that sense, this episode illustrates how Trump has shown every inclination to do the sorts of things that informational autocrats do, and if he were free to do so, I am sure he would move toward a more authoritarian or informationally autocratic setup. So far, however, we have seen a considerable degree of pushback and resilience on the part of American societal and democratic structures—through checks and balances and other mechanisms.

That said, it has been disappointing that we have not seen more resistance. The docility of Congress under Republican leadership and the questionable judgments of some courts have been troubling for those who view the White House’s attacks on the media, universities, and subnational governments as real threats to democracy. Those developments are certainly discouraging.

Nevertheless, across the board, we continue to see significant resistance, and that is what truly distinguishes full-fledged informational autocracies from developed democracies that manage to survive as democracies. It is not that democracies never produce populist politicians who want to push in an authoritarian direction—they do. These are politicians with authoritarian impulses, sometimes driven by narcissism or by a highly cynical political strategy. What ultimately varies is how far they are able to go.

Trump Is a Populist Proud of Defying Democratic Norms

Much of your work emphasizes that informational autocrats avoid crossing visible “red lines” that would trigger mass backlash. Does Trump’s increasingly explicit intimidation of the media suggest either miscalculation or a belief that democratic norms of speech protection have already eroded enough to absorb such shocks?

Professor Daniel Treisman: That’s a very good question, and it’s difficult to give a simple answer. I think there is sometimes an element of miscalculation. But let me step back for a moment—it’s not entirely clear that this is miscalculation, because we don’t fully understand what Trump’s strategy is.

In some ways, as I’ve said, he looks quite similar to various informational autocrats in authoritarian societies. But in other ways, he is quite different. As you noted, informational autocrats typically try not to appear overtly to be transgressing the rules of democracy. They present themselves as genuine, loyal democrats. They claim to follow constitutional procedures, often using legalistic language, and they frame their power grabs as legitimate exercises of authority for ostensibly valid purposes, such as protecting the public from pornography, terrorism, or similar threats.

The goal of genuine informational autocrats is not to challenge the system openly, but to create the impression that they are operating fully within democratic rules, while accusing their opponents of being undemocratic. They seek to project an image of competence, benevolence, and modernity, and to portray critics as those who threaten democracy.

There is an element of this in Trump’s behavior. He certainly accuses Democrats of being undemocratic. But there is also a distinct bravado—a deliberate defiance of democratic rules and norms. He openly states that when he pushes the Justice Department to investigate his critics and rivals, he is motivated by a desire for retribution. He rejects the idea of impartial justice and openly embraces the politicization of the justice system. In doing so, he often deliberately says things that are meant to provoke outrage and that are clearly undemocratic.

In this sense, he is not an authoritarian pretending to be a democrat. He is a populist politician who is, in some respects, openly proud of being undemocratic. He might argue that this is still democratic because his base supports him—and indeed, he does say that. But he also claims that there are no checks and balances, that the only constraint on him is his own morality, which amounts to a direct denial of the democratic system rather than a pretense of adherence to it.

So, it is difficult to determine whether this behavior reflects miscalculation or is simply part of his strategy, and whether he differs in this respect from informational autocrats. He appears to recognize that he is operating within a democratic system with a powerful civil society and has chosen to confront it directly and test its limits, rather than behaving like informational autocrats such as Orbán or early Putin, who presented themselves as ordinary democratic leaders supported by the majority while depicting their opponents as extremists seeking to undermine or overthrow democracy.

The Strategy Is to Score Visible Victories That Intimidate Others

Informational autocracies often rely on signaling repression—making examples rather than governing through mass coercion. How should we interpret Trump’s selective targeting of journalists, broadcasters, and universities in this light?

Professor Daniel Treisman: Well, it’s not just Trump, of course. This time he came in with a team that had thought carefully about how to attack various institutions in American society that they deeply opposed, including universities, law firms, some courts, and various subnational governments. The goal was quite directly to weaken those parts of what they viewed as a dominant political and cultural elite.

In part, yes, the strategy was to score some visible victories that would intimidate other members of a particular sector. So, you go after one university—like Columbia—very hard, essentially intimidating it into doing a deal, and then all the other universities would cave and negotiate individually with the Department of Education or the White House. There is an element here of signaling toughness, of attempting intimidation on a kind of wholesale scale.

That is quite similar to informational autocracies. There is less, as I mentioned earlier, of a concern with constraining actions to fit the appearance of democracy and normal democratic politics. Instead, there is a deliberate challenge—within the US context—to many of the legal underpinnings and long-standing understandings of the relationship between the presidency and other institutions, some of which have prevailed for decades or even centuries.

That said, this behavior is not entirely distinctive to authoritarian politics. All politicians try to signal their intentions by demonstrating, through particular cases, what their approach will be. What is distinctive here is that the goals of the Trump administration regarding universities and law firms have been very extreme. Essentially, they want greater control and a particular ideological orientation within universities, and they want to exclude intellectual approaches and philosophies they oppose.

With law firms, the aim is to discourage large, professional firms from opposing them or taking cases against them. That message was sent deliberately, through a barrage of attacks on different fronts very quickly during the first weeks and months of the administration, precisely in order to signal resolve and warn others.

So, in some respects, this does resemble informational autocracy. But it is also part of a broader phenomenon. Revolutionary politicians—or politicians seeking to implement fundamental changes—often come into office with a program and strike very hard at the outset to test how far they can go before resistance organizes and pushes back. Sometimes this is an effective strategy: if the initial blow is strong enough, opposition may fail to organize in time, allowing a new status quo to take hold.

Tech Billionaires Are Treated as Leverage Points

How does the rise of a tech “broligarchy”—with key digital venues controlled by figures such as Elon Musk, Mark Zuckerberg, and Jeff Bezos—complicate the classic logic of informational control? How do platform ownership, algorithmic governance, and strategic collaboration with populist leaders such as Donald Trump reshape the dynamics of informational autocracy? To what extent do these hybrid arrangements—combining formal pluralism with asymmetric visibility and amplification—enable populist actors to undermine epistemic authority and institutional trust without resorting to overt censorship?

Professor Daniel Treisman: That’s a great—and complicated—question. I think both informational autocracy and populism are closely tied to information and media. They tend to thrive in periods of technological change, when new media forms emerge.

In the early days of mass newspapers, for instance, that medium created new opportunities for populists to appeal to broader constituencies than had previously been mobilized in politics. We see something similar with the internet. As it became more developed and central to everyday life, it opened up new avenues for outsiders to engage in a different kind of politics. In democracies, this has been a major foundation of the recent populist wave.

In authoritarian contexts, similar opportunities have allowed authoritarian leaders to use the internet to communicate in new ways and to present themselves as democratic and competent through manipulation—much more effectively than old-style propaganda, which relied heavily on intimidation but was less successful in creating a convincing, all-encompassing political image. In this sense, new information technologies have reshaped not only perceptions of individual politicians but also broader understandings of the political system itself.

New information technology is therefore a central driver of the changes we are seeing in both democratic and authoritarian systems. In the American case, more specifically, the relationship between Trump and major technology firms—led by tech billionaires such as Elon Musk, Mark Zuckerberg, and others—is complex.

Going into Trump’s second term, there was something of a meeting of the minds between Silicon Valley and the Trump team. Many in the tech sector felt that the industry—and tech billionaires personally—had been mistreated by the Biden administration, citing what they perceived as hostility, attempts to censor right-wing or libertarian views, overregulation, and even the debanking of entrepreneurs involved in new areas such as cryptocurrency. This generated real antagonism toward the Democrats among parts of Silicon Valley, aligning well with the attitudes and plans of the Trump camp.

This was particularly evident in the case of Elon Musk, who was effectively given carte blanche to move aggressively against the federal bureaucracy and dismantle large parts of the government in a short period of time. At the same time, there have also been tensions—if not open confrontations—between the Trump administration and some tech leaders. Still, many of them appear to perceive shared opportunities.

Although Musk is no longer in the administration and clearly disagrees with Trump on certain issues, such as fiscal policy, he—and many other tech billionaires—continue to see opportunities in the current political environment. Not all, of course; some remain aligned with the Democrats. But many hold libertarian views and see Trump as more receptive to their ideas about technological development, the treatment of billionaires, and the balance between regulation and freedom.

The Trump administration has also actively sought to influence the media environment, particularly legacy media, by pressuring the owners of major networks. In ways reminiscent of informational autocracies, Trump has relied on defamation suits, libel actions, and other legal tools to intimidate and pressure media organizations.

With social media, however, the approach has been more indirect. Trump created his own social network and has shown little interest in directly regulating platforms such as X or Facebook. Instead, he treats tech billionaires much like other wealthy actors—as leverage points. If he wants something, he applies pressure, and as long as his demands are not too costly, they tend to comply. There is little incentive for them to engage in open confrontation.

That said, this does not amount to the kind of comprehensive, day-to-day control characteristic of full informational autocracies, where authorities maintain close, behind-the-scenes relationships with most media outlets and allow only marginal opposition voices without real influence or mass reach.

In short, the parallel between Trump and informational autocrats in this domain—much like in others—is imperfect. Some features are strikingly reminiscent of informational autocracy, while others differ substantially. These differences reflect both contextual factors—such as the scale and global reach of US-based technology companies compared to media in smaller authoritarian states—and Trump’s own distinctive political style.

Pluralism Survives, but the Playing Field Is Tilted

You and Sergei Guriev stress that modern autocrats seek to preserve the appearance of pluralism while hollowing it out. To what extent do Trump’s regulatory threats and litigation strategies resemble this logic of simulated legality rather than outright censorship?

Professor Daniel Treisman: I don’t see outright censorship. It is much more a matter of trying to persuade, of sending signals to the media to tone down criticism, or, as I mentioned, of confronting them with defamation suits or costly regulatory interference.

So, I think pluralism does exist; we do see pluralism in the United States. At the same time, there are constant efforts to tilt the playing field. Many of these efforts are not new. Republicans in the US political system have been doing this for a very long time—and not just Republicans; Democrats often use similar tools—to gain small, localized advantages, or sometimes larger ones, through practices such as gerrymandering or by refining voting laws in ways they believe will favor them.

All of that is, sadly, part of the American political tradition. Trump has often turbocharged this kind of behavior, as in the Texas mid-decade gerrymandering of congressional constituencies, but it is not radically new.

So, pluralism survives. There are efforts to win within a pluralist context, and there are also efforts to intimidate the opposition in this Trumpian, rather anarchic and blatant way. But I do not see real censorship or the kind of cohesive system we find in fully developed informational autocracies.

It is much more anarchic. Who knows how things will develop? Nobody can predict the future, but at present, it looks rather different to me.

Mistakes Are Easier to See in Retrospect

In “Democracy by Mistake,” you highlight how democracy often emerges—and collapses—not through design but through elite error. Looking at the US today, which elite misjudgments (judicial restraint, partisan polarization, media fragmentation) most plausibly explain the vulnerability of democratic guardrails?

Professor Daniel Treisman: In the US, we don’t really know. We don’t yet know whether what we are witnessing is an intense challenge to the democratic system—one that the forces of democracy will ultimately defeat—or whether we are observing a more gradual, long-term erosion in the quality of American democracy. For now, we have to reserve judgment.

Mistakes are much easier to identify in retrospect than as they are happening. One could argue that Trump has made many mistakes, and one could equally argue that leaders of democratic forces in the US have made many mistakes as well. Mistakes are universal and ubiquitous. Not all mistakes lead to the collapse of a regime—far from it.

For that reason, it is difficult to look at the US system and identify a single fateful mistake whose consequences we will clearly see five years from now. The main message of that article for the current situation is this: we should not assume that everything is rational or part of a carefully crafted plan. Mistakes can be forces for good when they contribute to the failure of anti-democratic politicians and regimes. But mistakes can also be forces for harm when they enable or empower authoritarian actors.

Trump Fits the Family of Charismatic Populists

Comparatively, how should we distinguish Trump’s personalization of power from Latin American charismatic populism (e.g., Chávez) and from the more bureaucratized authoritarianism of leaders like Putin or Orbán?

Professor Daniel Treisman: Clearly, Trump isn’t very good at bureaucracy. There are some people in his administration who do bureaucracy well—Russell Vought, head of the Office of Management and Budget, for example—and that is why they have had a greater impact on the federal bureaucracy than in Trump’s first term. But as an individual, Trump is clearly not a very systematic bureaucratic operator.

In that respect, he is more like charismatic populists. Putin does not have this kind of anarchic character, and Orbán is also much more systematic and skilled in statecraft and bureaucratic politics—although, of course, Orbán is also an effective populist and could be described by some as charismatic.

With regard to Chávez and other Latin American populists, Trump is obviously not quite like the left-wing populists of Latin America. Chávez had a revolutionary, Bolivarian discourse and a semi-Marxist worldview, and he maintained close emotional and political ties with other left-wing administrations across Latin America and Central America. That is quite different from Trump. Trump, after all, arrested the leader of the regime that evolved out of Chávez’s rule.

That said, there are right-wing populists in Latin America as well—Bolsonaro, for example—who are much more similar to Trump. Although Bolsonaro has more of a military background, in terms of personality and political approach Trump is closer to that type. Even when compared with left-wing populists like Chávez, Trump shares the fact that he is a populist who appeals—at least rhetorically, if not always through policy—to the masses of ordinary people whom he claims have been neglected and disrespected. That was also a central part of Chávez’s appeal.

So, I would say that Trump is distinctive in many ways, but he also clearly fits within the broader family of charismatic populists.

History Does Not Come with Labels

Finally, drawing on your work on predictability and early warning, which indicators should scholars prioritize to distinguish between episodic democratic stress and the onset of durable authoritarian transformation?

Professor Daniel Treisman: I should say at the outset that my work on predictability and prediction is quite limited, but I have been thinking about what is a philosophically deep question: the difference between trends and cycles. And I think the basic answer is that there is no definitive answer. You cannot know whether what appears to be changing at a particular moment represents a shift in the underlying trend—a breakpoint toward a new trajectory—or merely a cyclical fluctuation.

We see this across many spheres. If we look at the spread of democracy over the past 200 to 250 years, focusing here on the West (Europe and the Americas), we observe a very strong upward trajectory, from almost no democracies (depending, of course, on how one defines democracy) to a much larger number of countries that can be considered at least electoral democracies.

At the same time, we have seen waves: periods in which the share of democracies increases, followed by periods in which it declines or at least plateaus. In each of these moments of cyclical slowdown or reversal, people have proclaimed, “This is the end of democracy.” In every reverse wave, there has been fear that what we were witnessing was not just a cycle but a permanent shift away from democracy as a long-term reality. So far, those fears have been proven wrong in each case.

That said, I do not think there is any particular indicator or observational technique that can reliably tell us whether a change will be permanent or temporary. This reflects a deep feature of the world we live in and of our ability to understand history from within, rather than in retrospect. Looking backward, it is easy to apply statistical tests or analytical frameworks to determine whether a change was cyclical or represented a trend shift—it is almost trivial. But as history unfolds, I do not think there is any way to know for sure whether we are seeing something genuinely new or something that is repeating in a familiar pattern.

Different scholars have developed different mental models of the world, emphasizing one perspective or the other. Some believe in progress; others emphasize stagnation or endless repetition. This tension has run through Western philosophy and social science from the very beginning. My own position is to emphasize the high degree of uncertainty involved, and to push back against claims that we can clearly identify a change in the trend when it may well be a change in the cycle.

This is why I have written critically about responses to what some describe as a democratic recession, or even a reverse wave of democracy, in recent years. I think the evidence has not—or at least has not yet—fully supported such claims. There is growing evidence of a slowdown in the rate of democratic advance, and probably some degree of average backsliding. But there is an important distinction between backsliding and the long-term collapse of democracy.

So, we all need to remain attentive to this distinction and recognize that events, as they unfold, do not come with easily readable labels. We should have some respect for long-term trends, without assuming that they will automatically continue. There does seem to be a certain structural logic at work in many domains. The same is true of the stock market: there are both trends and cycles, and it is impossible to know on any given day whether a sharp drop is cyclical or part of a new trend. As we know, people have made—and lost—trillions of dollars betting on precisely that distinction.